The Fog Layer: Computing That Lives Between Cloud and Ground

The rapid expansion of the Internet of Things (IoT), edge devices, and real-time applications has transformed the traditional computing paradigm. While cloud computing has long been the backbone of large-scale data processing, it often struggles with latency, bandwidth constraints, and the sheer volume of real-time data generated at the edge. Enter fog computing, an intermediate layer between cloud and ground-level devices that enables faster processing, localized decision-making, and efficient resource management.

This article explores the concept of the fog layer, its architecture, applications, advantages, challenges, and the future of decentralized computing ecosystems.

What Is the Fog Layer?

The fog layer, the tier where fog computing takes place, is a distributed computing infrastructure that extends the cloud closer to the devices generating the data. Unlike cloud computing, which centralizes processing in distant data centers, the fog layer operates on local nodes, gateways, and edge servers located near those devices.

By bridging the gap between cloud and ground, fog computing architecture allows:

- Real-time processing for latency-sensitive applications

- Localized storage to reduce bandwidth usage

- Enhanced security and privacy by processing data closer to the source

- Intelligent orchestration of IoT networks

Essentially, the fog layer acts as a “buffer” or “middleware” between massive cloud servers and the numerous, geographically dispersed IoT devices in smart environments.

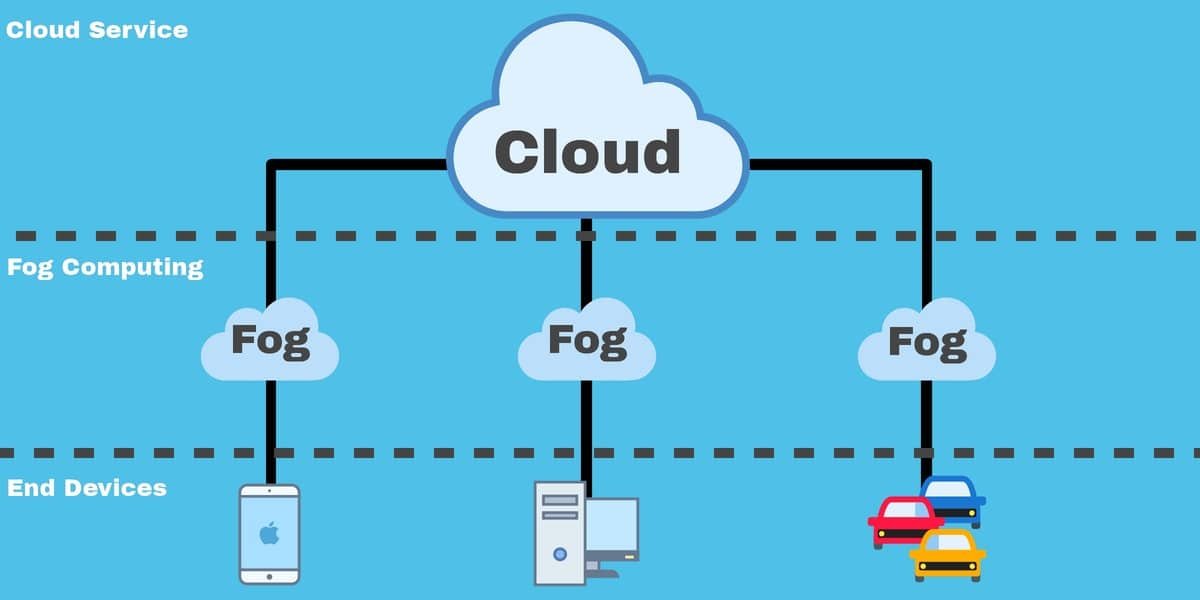

The Structure of Fog Computing Architecture

Understanding fog computing architecture is key to appreciating its role in modern computing. It is generally composed of three main layers:

1. Device Layer (Ground Layer)

This layer includes all IoT devices, sensors, actuators, and embedded systems generating raw data. Devices at the ground layer are typically resource-constrained, with limited computing power and storage capabilities.

Key functions at this layer include:

- Data collection from physical sensors

- Initial preprocessing or filtering

- Communication with the fog layer for further processing
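
As an illustration of the "initial preprocessing or filtering" step, the sketch below shows a deadband filter, one common way a constrained device can avoid sending readings that have barely changed. The function name `deadband_filter` and the threshold value are assumptions made for this example, not part of any specific device SDK.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Yield a reading only when it differs from the last reported value by more than threshold."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


# Hypothetical temperature samples; only significant changes are forwarded.
samples = [20.0, 20.1, 20.2, 21.0, 21.1, 19.5]
print(list(deadband_filter(samples, threshold=0.5)))
```

With these sample values, three of the six readings are forwarded to the fog layer; the rest are suppressed on the device.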

2. Fog Layer (Intermediate Layer)

The fog layer resides between the cloud and the device layer. It consists of edge servers, gateways, routers, and local micro-datacenters. This layer is responsible for:

- Aggregating and preprocessing data from multiple devices

- Performing real-time analytics and decision-making

- Providing temporary storage to reduce cloud bandwidth usage

- Enabling local orchestration for IoT networks

By performing these functions closer to the devices, the fog layer minimizes latency and allows rapid response in time-sensitive scenarios.
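
The aggregation role described above can be sketched as a fixed-window summary: a fog node groups readings per device and per time window, then keeps only a few statistics. The tuple layout `(device_id, timestamp_seconds, value)` and the function name `aggregate_window` are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Assumed reading layout: (device_id, timestamp_seconds, value)
Reading = Tuple[str, float, float]


def aggregate_window(readings: List[Reading], window_s: float) -> Dict[Tuple[str, int], dict]:
    """Group readings by device and fixed time window, then summarize each group."""
    buckets = defaultdict(list)
    for device_id, ts, value in readings:
        window_index = int(ts // window_s)  # which window this timestamp falls into
        buckets[(device_id, window_index)].append(value)
    return {
        key: {"count": len(vals), "min": min(vals), "max": max(vals), "mean": mean(vals)}
        for key, vals in buckets.items()
    }


readings = [("s1", 0.0, 10.0), ("s1", 30.0, 20.0), ("s1", 61.0, 30.0), ("s2", 5.0, 1.0)]
for key, summary in aggregate_window(readings, window_s=60).items():
    print(key, summary)
```

Only the per-window summaries would need to travel to the cloud; the raw readings can stay in temporary storage on the fog node.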

3. Cloud Layer (Top Layer)

The cloud layer is the centralized data center infrastructure responsible for long-term storage, large-scale analytics, and deep learning model training. Data that requires historical analysis, global optimization, or intensive computation is sent from the fog layer to the cloud.

Together, these three layers create a fog computing architecture that balances processing, storage, and intelligence across distributed nodes.

Core Components of Fog Computing Architecture

Several components define the effectiveness of a fog layer:

Fog Nodes

Fog nodes are intermediate servers or gateways located between IoT devices and the cloud. They handle data aggregation, preprocessing, and analytics, reducing the need for constant cloud communication.

Network Infrastructure

A robust network infrastructure, often leveraging 5G, LPWAN, or Wi-Fi 6, is essential to connect devices, fog nodes, and cloud servers. Lightweight messaging protocols such as MQTT and CoAP keep per-message overhead low, which helps on constrained devices and links.

Orchestration Systems

Orchestration tools manage resource allocation, load balancing, and fault tolerance across fog nodes. These systems ensure that fog computing architecture operates reliably and scales dynamically.
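
To make the load-balancing idea concrete, here is a minimal sketch of greedy least-loaded assignment, in which each incoming task goes to whichever fog node currently has the smallest total load. The node names and task costs are hypothetical, and real orchestrators weigh many more factors (memory, locality, failures) than this example does.

```python
import heapq
from typing import Dict, List, Tuple


def assign_tasks(node_names: List[str], task_costs: List[float]) -> Dict[str, List[int]]:
    """Assign each task (by index) to the node with the smallest accumulated load."""
    heap: List[Tuple[float, str]] = [(0.0, name) for name in node_names]
    heapq.heapify(heap)
    assignment: Dict[str, List[int]] = {name: [] for name in node_names}
    for task_index, cost in enumerate(task_costs):
        load, name = heapq.heappop(heap)           # least-loaded node so far
        assignment[name].append(task_index)
        heapq.heappush(heap, (load + cost, name))  # put it back with its new load
    return assignment


print(assign_tasks(["fog-a", "fog-b"], [5, 3, 2, 4]))
```

Ties are broken by node name here, which keeps the result deterministic.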

Security Frameworks

Security is paramount in the fog layer. Encryption, authentication, and access control mechanisms protect sensitive data processed at local nodes. In some cases, sensitive information can be processed and anonymized at the fog layer before being transmitted to the cloud.
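
One way to approximate the "processed and anonymized at the fog layer" step is keyed pseudonymization, sketched below with Python's standard `hmac` module. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping. The field name `device_id` and the key handling are assumptions for illustration only.

```python
import hashlib
import hmac


def pseudonymize(record: dict, secret_key: bytes, id_field: str = "device_id") -> dict:
    """Return a copy of record with the identifier replaced by a keyed SHA-256 hash."""
    raw_id = str(record[id_field]).encode("utf-8")
    token = hmac.new(secret_key, raw_id, hashlib.sha256).hexdigest()
    cleaned = dict(record)  # shallow copy so the original record is left untouched
    cleaned[id_field] = token
    return cleaned


# Hypothetical record; in practice the key would come from a secure store, not source code.
record = {"device_id": "meter-0042", "kwh": 3.7}
print(pseudonymize(record, secret_key=b"example-key-only"))
```

The same identifier always maps to the same token under a given key, so the cloud can still correlate records from one device without seeing the real identifier.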

Advantages of Fog Computing Architecture

1. Reduced Latency

One of the primary advantages of fog computing is low-latency processing. Applications like autonomous vehicles, industrial automation, and smart traffic management require real-time decisions that cloud-only systems struggle to deliver, because every decision incurs a round trip to a distant data center. Fog computing architecture moves critical analytics close to the data source.

2. Bandwidth Optimization

Transmitting raw data from thousands of IoT devices to the cloud can overwhelm networks. The fog layer aggregates and preprocesses data, sending only essential information to the cloud, thus optimizing bandwidth usage.
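
A quick back-of-the-envelope calculation shows how the saving arises. All numbers below (1,000 devices, one 200-byte reading per second, one 300-byte summary per device per minute) are hypothetical and chosen only to illustrate the arithmetic.

```python
def uplink_bytes_per_second(devices: int, messages_per_second: float, bytes_per_message: int) -> float:
    """Total upstream traffic generated by a fleet of identical devices."""
    return devices * messages_per_second * bytes_per_message


# Without a fog layer: every raw reading goes to the cloud.
raw = uplink_bytes_per_second(devices=1000, messages_per_second=1.0, bytes_per_message=200)

# With a fog layer: one summary per device per minute goes to the cloud.
summarized = uplink_bytes_per_second(devices=1000, messages_per_second=1 / 60, bytes_per_message=300)

reduction = 1 - summarized / raw
print(f"raw: {raw:.0f} B/s, summarized: {summarized:.0f} B/s, reduction: {reduction:.1%}")
```

Under these assumptions the cloud uplink drops from 200,000 bytes per second to about 5,000, a reduction of roughly 97.5%. Actual savings depend entirely on how much detail the summaries need to retain.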

3. Enhanced Security and Privacy

By processing sensitive data locally, the fog layer reduces exposure to cyberattacks during transmission. Data anonymization, encryption, and access controls within the fog computing architecture further safeguard privacy.

4. Scalability

Fog computing allows incremental scalability. Organizations can add fog nodes or gateways to accommodate additional IoT devices without overhauling the entire infrastructure.

5. Improved Reliability

Local processing reduces dependence on cloud availability. Even if cloud servers experience downtime, fog nodes can maintain core operations, ensuring continuous functionality in critical systems.
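
A common pattern behind this kind of resilience is store-and-forward: the fog node buffers outbound messages while the cloud is unreachable and drains the buffer once the link returns. The sketch below assumes a caller-supplied `send` function that returns `True` on success; the class name and the drop-oldest policy are illustrative choices, not a prescribed design.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer outbound messages locally and deliver them in order when the uplink works."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000) -> None:
        self._send = send
        # Bounded buffer: once full, appending silently drops the oldest message.
        self._buffer: Deque[dict] = deque(maxlen=max_buffered)

    def publish(self, message: dict) -> None:
        """Queue a message, then attempt delivery of everything pending."""
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages in order, stopping at the first failure. Returns the count sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)
```

Local control logic keeps running regardless of whether `flush` succeeds; only the upstream reporting is deferred. A bounded buffer trades completeness for predictable memory use, which matters on resource-constrained nodes.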

Applications of Fog Computing

Smart Cities

Smart city initiatives heavily rely on fog computing for real-time decision-making:

- Traffic management systems process data from connected vehicles and roadside sensors to optimize traffic lights and reduce congestion.

- Public safety monitoring leverages video analytics at fog nodes to detect incidents without overloading cloud resources.

- Environmental monitoring sensors analyze air quality, noise, and weather locally, triggering immediate alerts when necessary.

Industrial IoT (IIoT)

In manufacturing, fog computing architecture enables predictive maintenance, quality control, and operational efficiency:

- Sensors on production lines detect equipment anomalies in real time.

- Fog nodes analyze vibration, temperature, and pressure data locally to prevent machinery failure.

- Temporary storage of operational data reduces cloud dependency while supporting quick decision-making.
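
Local anomaly detection of the kind listed above can be as simple as a rolling z-score: compare each new reading with the mean and standard deviation of a recent window. This is a minimal sketch, not a production predictive-maintenance model; the class name, window size, and threshold are assumptions.

```python
from collections import deque
from statistics import mean, stdev
from typing import Deque


class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0) -> None:
        self._values: Deque[float] = deque(maxlen=window)
        self._z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if value is anomalous relative to the window, then add it to the window."""
        is_anomaly = False
        if len(self._values) >= 2:  # stdev needs at least two samples
            mu = mean(self._values)
            sigma = stdev(self._values)
            if sigma > 0 and abs(value - mu) / sigma > self._z_threshold:
                is_anomaly = True
        self._values.append(value)
        return is_anomaly


# Hypothetical vibration amplitudes; the last value is a clear spike.
detector = RollingAnomalyDetector(window=10, z_threshold=3.0)
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0]
print([detector.update(v) for v in vibration])
```

Because the check needs only a small window of recent values, it fits comfortably on a fog node and can raise an alert without a round trip to the cloud.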

Healthcare and Telemedicine

Healthcare IoT devices generate massive amounts of data. Fog computing facilitates:

- Real-time monitoring of patient vitals via wearable devices

- Local processing of imaging data for faster diagnosis

- Secure handling of sensitive patient data before transmitting anonymized insights to cloud servers

Autonomous Vehicles

Self-driving cars require split-second decisions:

- Fog nodes installed at traffic intersections process data from multiple vehicles to manage traffic flow.

- Vehicle-to-infrastructure (V2I) and vehicle-to-vehicle (V2V) communication rely on localized fog computing for collision avoidance and route optimization.

Retail and Logistics

Fog computing enhances operational efficiency in retail and supply chain management:

- Inventory management systems analyze shelf sensors and RFID data locally to trigger restocking alerts.

- Delivery fleets optimize routes in real time by processing traffic and weather data at edge nodes.

Technical Challenges of Fog Computing Architecture

Despite its advantages, fog computing faces several challenges:

1. Resource Constraints

Fog nodes have limited processing power and storage compared to cloud data centers. Balancing computation-intensive tasks with local capacity requires careful orchestration.
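
One simple orchestration policy for limited capacity is to place latency-sensitive tasks on the fog node first and send whatever no longer fits to the cloud. The sketch below illustrates that policy; the `Task` and `FogNode` fields, the units, and the example workloads are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Task:
    name: str
    cpu_millicores: int
    memory_mb: int
    latency_sensitive: bool


@dataclass
class FogNode:
    free_cpu_millicores: int
    free_memory_mb: int


def plan_placement(tasks: List[Task], node: FogNode) -> Dict[str, str]:
    """Place latency-sensitive tasks first; anything that does not fit locally goes to the cloud."""
    cpu, mem = node.free_cpu_millicores, node.free_memory_mb  # work on copies, do not mutate node
    plan: Dict[str, str] = {}
    # sorted() is stable, so tasks keep their original order within each priority group.
    for task in sorted(tasks, key=lambda t: not t.latency_sensitive):
        if task.cpu_millicores <= cpu and task.memory_mb <= mem:
            cpu -= task.cpu_millicores
            mem -= task.memory_mb
            plan[task.name] = "fog"
        else:
            plan[task.name] = "cloud"
    return plan


tasks = [
    Task("model-retrain", 800, 400, latency_sensitive=False),
    Task("brake-alert", 300, 128, latency_sensitive=True),
    Task("log-compaction", 200, 64, latency_sensitive=False),
]
print(plan_placement(tasks, FogNode(free_cpu_millicores=1000, free_memory_mb=512)))
```

In this example the heavy retraining job is pushed to the cloud while the two lighter tasks run locally. A real scheduler would also need a fallback for latency-sensitive tasks that do not fit anywhere nearby.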

2. Security and Privacy Risks

Although local processing reduces transmission risk, fog nodes themselves can be vulnerable to physical tampering, malware, or unauthorized access. Implementing robust security frameworks is essential.

3. Network Complexity

Coordinating thousands of devices, fog nodes, and cloud servers introduces network management complexity. Ensuring low latency and high reliability requires advanced network protocols and dynamic load balancing.

4. Standardization Issues

Fog computing is still evolving, and standardization remains limited in practice. Reference architectures exist, such as IEEE 1934, which is based on the OpenFog Reference Architecture, but compatibility across devices, vendors, and cloud platforms can still be challenging.

5. Data Management

Handling heterogeneous data streams from multiple devices requires effective preprocessing, storage, and analytics pipelines. Ensuring that relevant insights are extracted without overwhelming fog nodes is a key challenge.

Integration with Edge and Cloud Computing

Fog computing complements both cloud and edge computing. While edge devices perform extremely localized computation (e.g., on a single sensor or wearable), the fog layer aggregates data from multiple devices and nodes, providing intermediate intelligence before sending essential insights to the cloud.

This multi-tier architecture ensures:

- Reduced latency for time-sensitive decisions

- Efficient bandwidth utilization

- Scalable and reliable distributed intelligence

In this way, fog computing architecture forms a continuum between the cloud and ground-level devices, delivering adaptive intelligence across the network.

Emerging Trends in Fog Computing

5G and Beyond

High-speed, low-latency networks like 5G accelerate the deployment of fog computing. Fog nodes integrated with 5G infrastructure can handle massive IoT data streams in real time, enabling applications in autonomous vehicles, smart cities, and industrial automation.

AI Integration

Artificial intelligence enhances fog computing by enabling predictive analytics at the fog layer. Machine learning models can process data locally, providing near-instant insights without relying on cloud-based computation.

Serverless Fog Computing

Serverless frameworks are being adapted for fog computing, allowing dynamic resource allocation. Functions are executed on demand at fog nodes, improving efficiency and scalability while reducing idle resource usage.
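
The on-demand execution model can be illustrated with a tiny in-process function registry: handlers are registered per event type and run only when a matching event arrives. This is a conceptual sketch and does not reflect the API of any particular serverless platform; `FunctionRegistry`, the event name, and the threshold are made up for the example.

```python
from typing import Any, Callable, Dict

Handler = Callable[[dict], Any]


class FunctionRegistry:
    """Map event types to handler functions and invoke them on demand."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, event_type: str) -> Callable[[Handler], Handler]:
        """Decorator that binds a function to an event type."""
        def decorator(func: Handler) -> Handler:
            self._handlers[event_type] = func
            return func
        return decorator

    def invoke(self, event_type: str, payload: dict) -> Any:
        """Run the handler for event_type, or raise KeyError if none is registered."""
        handler = self._handlers.get(event_type)
        if handler is None:
            raise KeyError(f"no function registered for event type {event_type!r}")
        return handler(payload)


registry = FunctionRegistry()


@registry.register("temperature.reading")
def check_overheat(payload: dict) -> str:
    # Hypothetical rule: anything above 80 degrees Celsius triggers an alert.
    return "alert" if payload["celsius"] > 80 else "ok"


print(registry.invoke("temperature.reading", {"celsius": 95}))
```

Nothing runs between events, which is where the reduction in idle resource usage comes from; a real platform adds isolation, scaling, and cold-start management on top of this dispatch step.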

Blockchain for Security

Blockchain technologies are being integrated into fog computing architecture to make data transactions between nodes tamper-evident, so that any later modification of a recorded transaction can be detected. This is particularly useful for supply chain, healthcare, and finance applications.
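
The core mechanism that makes such records tamper-evident is hash chaining: each entry stores the hash of the previous one, so altering any earlier entry invalidates everything after it. The sketch below shows only that linking step and deliberately omits the distributed consensus that a real blockchain adds; the function names and record fields are assumptions.

```python
import hashlib
import json
from typing import List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


def append_entry(chain: List[dict], payload: dict) -> None:
    """Append a payload, linking it to the hash of the last entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"payload": payload, "prev_hash": prev_hash, "hash": _digest(prev_hash, payload)})


def verify_chain(chain: List[dict]) -> bool:
    """Recompute every hash and check each link; False means some entry was altered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical shipment records exchanged between fog nodes.
ledger: List[dict] = []
append_entry(ledger, {"shipment": "A1", "qty": 10})
append_entry(ledger, {"shipment": "A2", "qty": 4})
print(verify_chain(ledger))
```

Changing the `qty` of the first entry after the fact would make `verify_chain` return `False`, because the stored hash would no longer match the recomputed one.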

Energy-Efficient Fog Nodes

Green computing initiatives are driving the development of energy-efficient fog nodes, reducing the carbon footprint of distributed processing and making fog computing sustainable at scale.

Case Studies in Fog Computing

Smart Traffic Management in Barcelona

Barcelona deployed a fog-based traffic system that processes data from IoT sensors on vehicles and roads. Local fog nodes analyze congestion patterns and optimize traffic signals in real time, reducing commute times and emissions.

Industrial Automation in Germany

Manufacturers in Germany use fog nodes on production lines to monitor machinery health. Predictive maintenance alerts are generated locally, minimizing downtime and preventing costly failures.

Healthcare Monitoring in Remote Areas

Fog computing enables real-time patient monitoring in rural healthcare centers. Wearable devices transmit vitals to fog nodes for immediate analysis, while anonymized summaries are sent to central hospitals for long-term monitoring.

The fog layer is rapidly transforming the computing landscape, providing a critical bridge between cloud intelligence and ground-level devices. By enabling low-latency processing, efficient bandwidth usage, and localized decision-making, fog computing architecture is becoming indispensable for IoT, smart cities, industrial systems, healthcare, and more.

FAQs

1. What is fog computing architecture?

Fog computing architecture is a distributed computing framework that operates between cloud servers and edge devices. It processes data locally on intermediate nodes, reducing latency and bandwidth usage and improving real-time decision-making.

2. How does the fog layer differ from edge computing and cloud computing?

Edge computing occurs directly on devices or sensors, while cloud computing centralizes processing in distant data centers. The fog layer sits between them, aggregating and analyzing data from multiple edge devices before forwarding selected information to the cloud.

3. What are the key components of fog computing architecture?

The core components include fog nodes (local servers or gateways), network infrastructure for low-latency communication, orchestration systems for resource management, and security frameworks to protect data during local processing.

4. What are the benefits of implementing a fog layer?

Benefits include reduced latency, optimized bandwidth usage, enhanced privacy and security, scalability, improved reliability, and real-time analytics for IoT, industrial, and smart city applications.

5. What challenges are associated with fog computing?

Challenges include limited resources on fog nodes, security vulnerabilities, network complexity, lack of standardization, and the difficulty of managing data efficiently across heterogeneous devices.

6. In which industries is fog computing most useful?

Fog computing is widely applied in smart cities, industrial IoT, healthcare, autonomous vehicles, retail, logistics, and any environment requiring low-latency, real-time processing of distributed data.

Conclusion

The fog layer represents a transformative approach to distributed computing, bridging the gap between cloud servers and edge devices. Fog computing architecture enables localized processing, real-time analytics, and efficient resource utilization, addressing the limitations of traditional cloud-centric systems.

By deploying fog nodes, organizations can reduce latency, optimize bandwidth, enhance privacy, and maintain resilient operations across industries such as healthcare, smart cities, manufacturing, and autonomous transportation. As IoT ecosystems expand and real-time decision-making becomes increasingly critical, fog computing will play a central role in creating responsive, intelligent, and secure distributed systems.